Nearly every building used by humans was made possible by the risk industry. At the pinnacle of the risk industry sits the reinsurance industry: the companies that insure the risks of every insurance company in the world. Every company that works in Architecture, Engineering, and Construction (AEC) and builds structures needs Errors and Omissions and General Liability (EO/GL) and other specialized insurance policies to operate. So when the major reinsurers post warnings about performance problems, it would behoove the industry to pay attention.

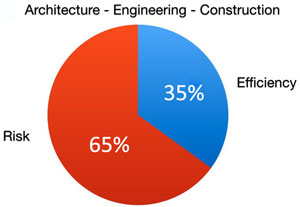

Simple risk can be evaluated on a scale of 0 to 100: for every point lost with respect to efficiency and quality, you add a point on the risk side. If your industry is seen as 35% efficient in its purpose, it is then 65% inefficient, or 65% at risk. It’s on the risk side that the dragons lie: the precursors of everything that will go wrong.

In 2008 the reinsurance industry took a jolt as many supposedly triple-A-rated companies were decimated. This raised the question of exactly what the third-party rating agencies had missed, and whether a new methodology was needed as a double-blind verification of ratings to protect against surprises and future disaster. Teams of consultants and analysts around the world are now using different forms of due diligence to shine light on future issues.

In North American AEC, especially with regard to buildings for human use, extensive issues are being detected that stem from the industry’s failure, or unwillingness, to understand the building’s purpose for the humans who occupy it. In truth, a building can only be considered “successful” if there is a demonstrated feedback mechanism validating success for whatever human task is occurring in it. A hospital should be rated as architecturally successful based on its ability to heal patients, a home based on its ability to provide human comfort, and so on. The building’s ability to facilitate the success of the human task taking place inside it is what insurers rate on a scale of efficiency.

At a widely discussed recent series of meetings among reinsurers, some staggering focus problems came to the fore. The reinsurance industry perceives these problems as the most likely to cause large future claims liability. Ranked in priority order, the risk industry rates building success on how well the building achieves:

A. Human comfort (the prime directive, which includes thermal comfort and air quality)

B. Human safety and health

C. Durability

D. Energy efficiency

Each objective was prioritized based on its own risk matrix, and the prioritization and interdependence were validated in several parts of the industry. While the AEC industry worldwide is presently focused on objective D, with a secondary consideration of C, the objectives that reinsurers consider paramount, A and B, are essentially ignored.

If the risk industry weights success in item A at 50% of the efficiency calculation, item B at 30%, item C at 15%, and item D at 5%, then the current AEC industry focus will, at best, yield only 20% efficiency, and, accordingly, 80% risk. Further, if interdependencies are examined, A and B can operate independently from C and D, but C and D can have a negative effect on A and B unless C and D are deliberately designed to enhance A and B—which in today’s world they are absolutely not. Put another way, if the prime directive of the entire exercise is A, then all other tasks must be subordinate to and focused on achieving A, or risk increases.
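
The weighted arithmetic above can be checked with a minimal Python sketch. It assumes each objective is scored as the fraction achieved (0.0 to 1.0), uses the paragraph's illustrative weights (not a published reinsurer formula), and treats risk as 100 minus efficiency, following the simple risk scale described earlier. The function names are hypothetical.

```python
# Illustrative weights from the text: A = human comfort, B = safety and health,
# C = durability, D = energy efficiency. These are the article's hypothetical
# weights, not a published formula.
WEIGHTS = {"A": 50, "B": 30, "C": 15, "D": 5}


def efficiency(achieved: dict[str, float]) -> float:
    """Weighted efficiency (0-100) from the fraction (0.0-1.0) of each objective achieved."""
    return sum(WEIGHTS[k] * achieved.get(k, 0.0) for k in WEIGHTS)


def risk(eff: float) -> float:
    """Simple risk scale: every point of lost efficiency is a point of risk."""
    return 100 - eff


# Today's industry focus as described: C and D fully pursued, A and B ignored.
current_focus = {"A": 0.0, "B": 0.0, "C": 1.0, "D": 1.0}
eff = efficiency(current_focus)
print(f"efficiency = {eff:.0f}%, risk = {risk(eff):.0f}%")  # efficiency = 20%, risk = 80%
```

Even perfect delivery of C and D caps efficiency at their combined weight of 20 points, which is why the paragraph concludes that the current focus yields at best 80% risk.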

According to the US Department of Labor’s standardized measurements, AEC industry efficiency has been dropping continually, down to today’s estimate of 35%, which translates to 65% risk. This can be cross-referenced with the steady increase in building soft costs, inclusive of EO and GL, which (after eliminating tax offsets, subsidies, and the falsification of liability levels by artificially limiting recourse through contract language) have today reached upward of 50%.

As the measurement of adherence to the prime directive becomes increasingly data-driven, the industry’s inefficiencies are starkly illuminated by its own claims of superior efficiency, which rest only on mechanical and physical building performance at the component or system level. From the risk management viewpoint, this deliberate avoidance of any metrics around the prime directive only increases risk.

For example, HVAC systems are the life support of any building. Yet all HVAC systems are rated on the mechanical performance of the system, with zero focus on the results. The stated purpose of the system is to create human thermal comfort and to provide air quality through ventilation, but neither of those is measured. AC means air conditioning, not just cooling, and comfortable air is a lot more than meeting a CO2 and temperature threshold. This becomes especially problematic given that some 60,000 chemicals are used in AEC, only 5% of which have a human impact that is even vaguely understood, and that very tight envelopes leave occupants entirely dependent on a conditioning system with no comprehension of human reactions to these chemicals. Again, efficiency and quality plummet, and risk increases.

Artificial intelligence (AI) systems are being created today that link parametric models to buildings and building designs to ascertain standard design performance metrics. Exergy is a thermodynamic concept that encompasses the answers to the prime directive, and it needs to be pulled out of its complexity rabbit hole and made comprehensible to mere mortals (no offense to ASHRAE 55, which is trying). A building can have a stellar energy efficiency (EE) or LEED rating, but if no attention has been paid to the prime directive, then its total exergy is low and its risk is high. Most US buildings have an exergy rating of 15%, leaving them with an unacceptable 85% risk level and an abysmal failure at providing what their occupants need. Consider that if a building does not focus on prime directive A, the humans in it are likely to do anything in their power to achieve comfort, and in the process violate B, C, and D until A is accomplished.

Purpose logic trees can also illuminate problems: if directives A and B are ignored, and the focus is on C and D, then the building has no raison d’être. Risk is high, and it will soon be demolished to make way for another mistake until the prime directive is accidentally realized.

The prime directive is also the defining quality objective, and how well it is attained defines the quality level—which in turn defines the success of the prime objective. Deming many years ago pointed out that any industry that focuses on costs will discover that quality diminishes, and any industry that focuses on quality will reduce costs (which the staggering advances in computer technology prove every day). Technology, however, is not a panacea for an industry facing the wrong way in history.

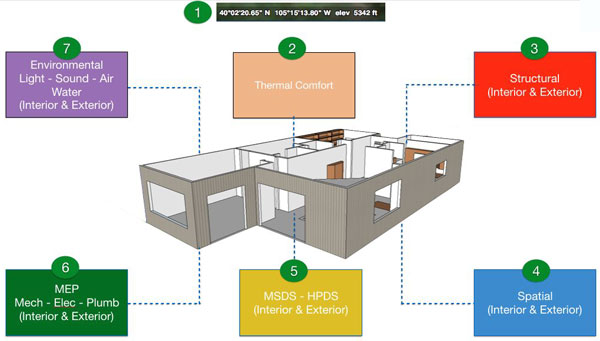

The same risk research found that understanding and achieving the prime directive entails having very specific information available both to humans and (in the not-too-distant future) to the artificial intelligence systems tracking and resonating with the real world:

- Geospatial location defines the exterior influences on the project.

- The thermal comfort and air quality indices must be designed to measure the capacity to deliver objectives A and B through environmental controls.

- Structural information defines the capacity of components to deliver objectives A and B.

- Spatial information describes the building’s relationship to human scale and activity, which directly impacts the capacity to deliver objectives A and B.

- Material safety data sheet (MSDS) / health product declaration sheet (HPDS) data models and datasets provide an understanding of how chemical components are used in the structure to deliver objectives A and B.

- MEP (mechanical, electrical, and plumbing) information describes the capacity of those components to deliver objectives A and B.

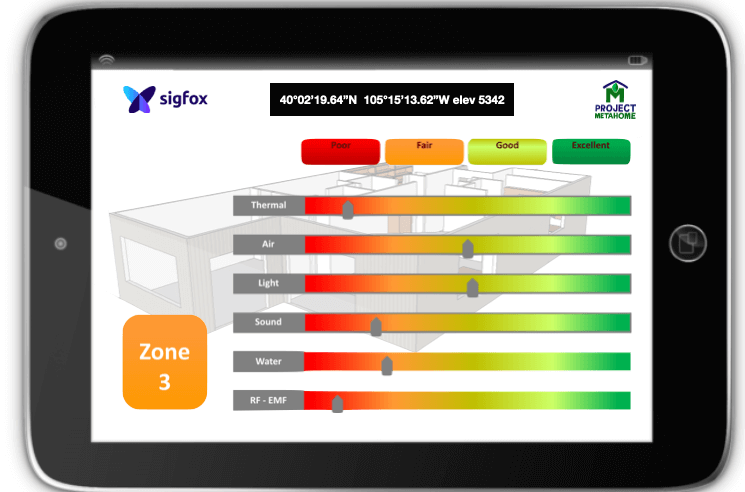

- Environmental assessment from the human perspective is a Quality of Experience (QoE) matrix of how light, sound, air, and water deliver objectives A and B. All the above parameters feed into the QoE rating.

Often, when challenged on the quality of a building’s function, the industry responds, “Well, it’s to code.” The original purpose of code was to address objective B, safety, without any thought of prime directive A. A code-centric response from the industry inevitably pushes up risk, because the responder has just admitted to ignoring the building’s prime objective in relation to its inhabitants. This could be seen as an acknowledgement that the requirements of the EO/GL policy have been ignored, potentially invalidating the policy coverage.

AI models are being built that link to the structure’s parametric models and building automation system and can report exactly what quality levels of thermal comfort, air, light, and sound are being supplied. These models report to the risk carriers, who are aware that poor quality levels drive up risk and can cause incalculable damage to the human occupants. Most AEC firms tend to look at this as beyond the scope of their responsibility, but from the risk point of view, if you design, engineer, or build a structure, then you are responsible for its performance. When EO/GL policy coverage is focused on minimum performance levels, this will be both a large carrot and a stick to promote improvement and accountability.

It has become standard procedure for a contractor, with the architect and engineer, to test a building’s functions when it is empty, before the inhabitants arrive, and then allocate a break-in period to try to adjust the systems to whatever the humans are doing. In 100% of cases, this leads to incessant finger-pointing, with poor performance blamed on the support teams operating the building. This naturally drives up risk on multiple levels. In the near future, we could see risk carriers holding that if the AEC team cannot demonstrate adequate performance against the prime directive for no less than 5 years of the building’s lifespan, then the AEC team will be held at fault for any claims arising out of poor-quality thermal comfort, air, light, or sound.

In most US cities, dumb or smart, little more than 15% of the information listed above about a building is available in any format. Thus, monitoring, validating, or planning becomes horrendously complex or even impossible. This turns smart city objectives into an oxymoron and exposes the reinsurance industry to an 85% risk situation.
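
The relationship between missing information and risk exposure can be sketched in a few lines of Python. This is a minimal illustration, not an established metric: the category keys are hypothetical labels for the information types listed earlier, every category is weighted equally (an assumption), and each value is the fraction of that category's data that can actually be retrieved.

```python
# Hypothetical keys for the information categories listed in the article.
# Equal weighting of the categories is an assumption for illustration.
CATEGORIES = (
    "geospatial",
    "thermal_comfort_air_quality",
    "structural",
    "spatial",
    "msds_hpds",
    "mep",
    "qoe_environmental",
)


def information_coverage(available: dict[str, float]) -> float:
    """Percent of the listed building information that exists in any format.

    `available` maps a category to the fraction (0.0-1.0) of its data that can
    be retrieved; categories that are absent from the dict count as 0.
    """
    total = sum(min(max(available.get(c, 0.0), 0.0), 1.0) for c in CATEGORIES)
    return 100 * total / len(CATEGORIES)


# Hypothetical building where only the site's geospatial data can be found.
coverage = information_coverage({"geospatial": 1.0})
print(f"coverage = {coverage:.1f}%, risk exposure = {100 - coverage:.1f}%")
```

With one of seven categories available, coverage is about 14.3% and the remaining 85.7% is unverifiable, which is the same order as the situation described for most US cities.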

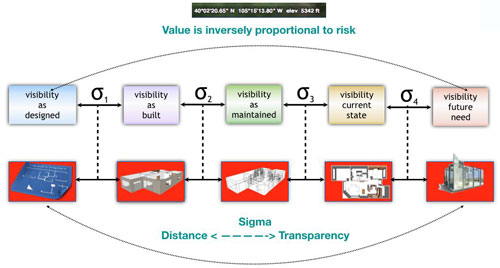

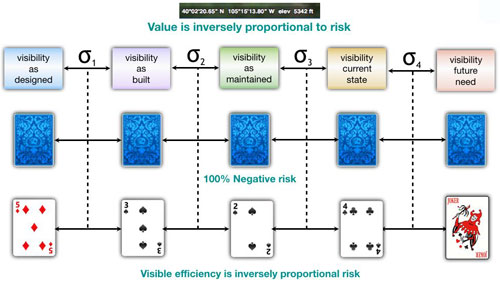

After a project is complete, information retention and validation systems are needed to confirm compliance with the project’s prime objective. These systems need to be consistent, transparent, and cross-verifiable. Risk analysis does this by calculating sigma (the deviation from the project goal) between the various states. Keeping information about the project in separate datasets again drives up risk. If, for instance, the geospatial data is in ArcView or on paper maps, the design information is scattered across CAD or BIM files, and the maintenance information is somewhere else again, there is no quantifiable or verifiable way to measure the sigma from one state to the next, and risk goes up. The lower the sigma from one state to the next, the lower the risk projection.
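
The article does not give a formula for this sigma, and in statistics sigma usually denotes a standard deviation. The minimal Python sketch below assumes instead the root-mean-square deviation of each project state's score from the project goal, which is one reasonable reading of the text. The function name, the state keys, and the example scores are all hypothetical. A state whose data is missing makes the calculation impossible, mirroring the point about scattered datasets.

```python
import math
from typing import Optional


def sigma_from_goal(states: dict[str, Optional[float]], goal: float) -> Optional[float]:
    """Root-mean-square deviation of each project state's score from the goal.

    `states` maps a project state (as designed, as built, ...) to a comparable
    score for one prime-directive metric. None marks a state whose data is
    missing or locked in an unconnected system; then no sigma can be computed
    and the function returns None.
    """
    scores = [v for v in states.values() if v is not None]
    if not scores or len(scores) != len(states):
        return None
    return math.sqrt(sum((s - goal) ** 2 for s in scores) / len(scores))


# Hypothetical comfort-index scores (0-100) against a design goal of 90.
project = {"as_designed": 90.0, "as_built": 80.0, "as_maintained": 70.0}
print(sigma_from_goal(project, goal=90.0))

# Maintenance data kept "somewhere else": the spread can no longer be measured.
print(sigma_from_goal({**project, "as_maintained": None}, goal=90.0))  # None
```

Returning None rather than guessing reflects the risk view in the text: an unmeasurable state is not a neutral state but a gap that pushes risk up.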

Imagine that each state of the project (as designed, as built, as maintained, as it currently is, and as it needs to be in the future) is described by a single playing card. Turn all five cards face down, making project states unreadable and unverifiable, and you have 100% risk. Now turn over the as-built card, and let’s say it’s a 5, representing an as-built state of 50% compliance and 50% risk. Do this with all the cards and now you have a full picture of compliance with the prime and other directives. Now remove any of the cards and place it in another room; this represents much of today’s information accessibility—the data needed to accurately quantify risk is missing and unobtainable.
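
The card analogy can be turned into a tiny risk calculator. This Python sketch is illustrative only: it follows the text's example that a card value of 5 means 50% compliance (so a value v means v × 10 percent), counts face-down or missing cards as 100% risk, and averages the five states equally, which is an assumption the article does not state. The function name and state keys are hypothetical.

```python
from typing import Optional

# The five project states named in the card analogy.
STATES = ("as_designed", "as_built", "as_maintained", "as_is", "as_needed")


def card_risk(cards: dict[str, Optional[int]]) -> float:
    """Average risk (0-100) across the five project states.

    Assumptions for illustration: a card value v (1 to 10) means v * 10 percent
    compliance, so a 5 is 50% compliance and 50% risk. A card that is face down
    (None) or removed to another room (absent key) counts as 100% risk.
    Weighting the five states equally is also an assumption.
    """
    risks = []
    for state in STATES:
        value = cards.get(state)
        if value is None:
            risks.append(100.0)  # unreadable or unavailable state
        elif 1 <= value <= 10:
            risks.append(100.0 - 10 * value)
        else:
            raise ValueError(f"card value for {state} must be 1 to 10, got {value}")
    return sum(risks) / len(STATES)


print(card_risk({}))               # all cards face down -> 100.0
print(card_risk({"as_built": 5}))  # only the as-built card revealed -> 90.0
```

Treating a missing card exactly like a face-down card captures the closing point of the analogy: data that exists but cannot be reached contributes nothing to quantifying risk.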

Risk management systems can now focus not only on prime directive performance data but also on the systems that should be maintaining project focus. In the not-too-distant future, insurance policies will specify the types of information systems needed for compliance with EO/GL and other coverage, and how the data needs to be collected and organized. Companies that give risk carriers real-time access to their data will be classified as much better risks, resulting in savings in soft costs.

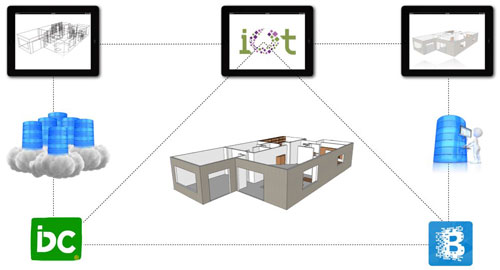

Many of the tools that caused risk management so many problems with timely transparency and verification in 2008 have become obsolete and are being swept aside by tools such as Intelligent Direct Current (IDC), which turns the building’s energy system into a massive data grid supplying power and services as and when needed to achieve specific QoE levels; parametric modeling, which establishes a virtual twin of the building’s performance targets (A, B, C, D); Internet of Things (IoT) sensors that measure, monitor, and adjust all (A, B, C, D) quality-of-service levels; and, last but not least, blockchain services that enable real-time mini contracts and payments aligned to the A, B, C, D metrics to flow 24/7, 365 days a year, to all interested parties. Blockchain especially is being considered the tool of choice for risk management fiscal flow, as it enables automatic risk-reward calculations to be seen in real time from every aspect, including the whole AEC cycle through the life of the building, and in many cases even what the occupants of the building are doing. Add artificial intelligence (AI) overseeing the system, and the world turns upside down for the better.

Third-party certification that uses textual or mathematical theory unconnected to reality to certify a physical process as compliant proved catastrophic in 2008. Risk managers depended on AAA, 5-star, LEED, Baldrige, or other variants of quality assurance systems purporting to demonstrate physical quality through static documentation, and 85% of the performance guarantees implied by those systems proved to be flat-out unachievable.

It has long been assumed that insuring bad behavior (such as building in floodplains or in wildfire- or earthquake-prone areas) was acceptable at a specific cost. The AEC industry has long seemed to believe that the natural laws of fluid dynamics and thermodynamics could be overcome by brute force in the built environment (e.g., levees and air conditioners), and if the brute-force solution fails, well, failure is insured against, so why worry? But the master reinsurers may not have the capital capacity to continue feeding this behavior, as was shown clearly in 2008. When the risks inherent in ignoring the prime directive are also taken into account, insuring bad behavior will no longer be possible.

In very simple terms, as Richard Feynman so eloquently said after the Challenger accident, “For a successful technology, reality must take precedence over public relations, for Nature cannot be fooled.” When you disobey fundamental physical laws such as thermodynamics, there is a direct human cost and that in turn drives up risk—period.

Fire-hose-sized streams of data on buildings are becoming available worldwide to be sliced and diced any way you wish. They are conclusively proving that well-built structures targeted to deliver, and actually delivering, the prime objective are less expensive to create, operate, and maintain; last longer; and present much lower risk than unfocused or incorrectly focused expensive structures. In other countries, followers of Building Biology, Passive House, and Biophilia have been making headway in transmitting this message for many years, and now it’s North America’s turn for a wake-up call.

Resiliency, and better structures that achieve objectives A and B, will require industry efficiency and quality levels of 80% and higher, which will demand a very different approach from the one that has failed us so far. Different management and project tools will be needed, along with a very different thought process. This is the challenge facing the brilliant students pouring out of universities today: to achieve the prime directive and live happily ever after.